In case you’re not yet tired of reading about AI here and there, here’s one more article on this topic.

Recently, I came across a blog post by Picovoice about their picoLLM Inference Engine SDK for running Large Language Models (LLMs) locally. As the title suggests, I decided to try it out, but with a twist — launching it from a Salesforce Experience Cloud site.

This article demonstrates how to implement this using the picoLLM Inference Engine SDK. We’ll cover the initial setup, configuration, and coding required to achieve this integration.

Demo on Mac and Windows

Initial Configuration

Before we begin, there are a few configuration steps to complete.

Obtain a Picovoice AccessKey

Create a Picovoice account and obtain the AccessKey at the console page.

Download LLM models

Navigate to the picoLLM page and download a model of your choice. To get started, I recommend downloading either a Gemma or Phi2 model with chat capabilities. These are the most lightweight and fastest options available.

Enable Digital Experiences and create a Site

The LLM will run from an Experience Cloud site. To make this possible, enable Digital Experiences in the org by following the few quick steps in the official guide. After enabling the feature, create a new site using any template you like. The example in this article uses the Customer Service template.

Disable Lightning Locker

Disable Lightning Locker for the Experience Site, as it restricts access to the window object in LWC.

Add Trusted URLs

To let our code access the Unpkg and Pico related URLs, we need to add them to Trusted URLs in the org.

Unpkg

- API Name: Unpkg;

- Active: checked;

- URL: unpkg.com;

- CSP Context: Experience Builder Sites;

- CSP Directives to check: connect-src (scripts).

Pico

- API Name: Pico;

- Active: checked;

- URL: kmp1.picovoice.net;

- CSP Context: Experience Builder Sites;

- CSP Directives to check: connect-src (scripts).

Add Trusted Sites for Scripts

The link to the npm module should be added as a Trusted Site for Script.

Unpkg

- Name: Unpkg

- URL: https://unpkg.com/@picovoice/picollm-web@1.0.7/dist/esm/index.js

- Active: checked
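To double-check which hosts the entries above need to cover, the origins can be derived from the URLs the component touches. This is only a small sketch: the variable names are made up for illustration, and the https scheme for the Pico host is an assumption, since the article lists that host without a scheme.

```javascript
// URLs taken from this article; the https scheme for the Pico host is an assumption.
const urlsUsedByTheComponent = [
  "https://unpkg.com/@picovoice/picollm-web@1.0.7/dist/esm/index.js?module",
  "https://kmp1.picovoice.net"
];

// Reduce each URL to its origin (scheme + host) and drop duplicates.
const requiredOrigins = [
  ...new Set(urlsUsedByTheComponent.map((url) => new URL(url).origin))
];

console.log(requiredOrigins);
```

Each origin in the resulting list should have a matching Trusted URL entry; the full module URL additionally goes into Trusted Sites for Scripts.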

Let’s start coding

Create a static resource

The @picovoice/picollm-web npm package needs to be used. Since dynamic imports from external sources are not supported by Lightning Web Components (LWC), a static resource should be created to import the module and expose it to LWC as a class.

Create the static resource

Open your terminal in the SFDX project directory and run:

sf static-resource generate -n PicoSDK --type application/javascript -d force-app/main/default/staticresources/

This will create PicoSDK.js in the force-app/main/default/staticresources/ directory.

Import the Pico SDK and expose it to LWC

Open force-app/main/default/staticresources/PicoSDK.js and add the following code (replace ACCESS_KEY with the one you obtained previously):

PicoSDK.js

const MODULE_URL =

"https://unpkg.com/@picovoice/picollm-web@1.0.7/dist/esm/index.js?module";

const ACCESS_KEY = "YOUR_ACCESS_KEY";

class Pico {

PicoLLMWorker;

_picoSdk;

constructor() {

return this;

}

async loadSdk() {

this._picoSdk = await import(MODULE_URL);

}

async loadWorker(model) {

this.PicoLLMWorker = await this._picoSdk.PicoLLMWorker.create(

ACCESS_KEY,

{

modelFile: model

}

);

}

}

(() => {

window.Pico = new Pico();

})();

- Unpkg acts as a CDN, allowing direct access to the PicoLLM Web SDK without needing to install it locally.

- The loadSdk() method uses a dynamic import to fetch the SDK from Unpkg.

- A new Pico instance is created and assigned to window.Pico, making it globally accessible for use in LWC.
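Because loadSdk() is asynchronous, a caller that does not wait for it can reach loadWorker() before the SDK has arrived. One possible guard, sketched here, keeps the import promise and awaits it inside loadWorker(). The PicoLoader class and the injected importer function are hypothetical names, not part of the Picovoice SDK, and the fake SDK object exists only so that the sketch runs outside a browser.

```javascript
// Sketch only: `importer` stands in for `() => import(MODULE_URL)`.
class PicoLoader {
  constructor(importer) {
    this._importer = importer;
    this._sdkPromise = null;
    this.worker = null;
  }

  // Starts the import once and returns the same promise on repeat calls.
  loadSdk() {
    if (!this._sdkPromise) {
      this._sdkPromise = this._importer();
    }
    return this._sdkPromise;
  }

  // Waits for the SDK even if the caller never awaited loadSdk().
  async loadWorker(accessKey, model) {
    const sdk = await this.loadSdk();
    this.worker = await sdk.PicoLLMWorker.create(accessKey, { modelFile: model });
    return this.worker;
  }
}

// Fake SDK (hypothetical) so the sketch can run without the real module.
const fakeSdk = {
  PicoLLMWorker: {
    create: async (accessKey, options) => ({ accessKey, options })
  }
};

let importCount = 0;
const loader = new PicoLoader(async () => {
  importCount += 1;
  return fakeSdk;
});

const samePromise = loader.loadSdk() === loader.loadSdk();
const workerPromise = loader.loadWorker("KEY", "model-file");
```

With this shape, the order in which the component calls loadSdk() and loadWorker() no longer matters, and the import is requested only once.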

Create a Lightning Web Component

A new Lightning Web Component should be created. Let’s name it LocalLlmPlayground. The LWC bundle files should be updated to include the following code.

localLlmPlayground.js-meta.xml

<?xml version="1.0" encoding="UTF-8"?>

<LightningComponentBundle xmlns="http://soap.sforce.com/2006/04/metadata">

<apiVersion>61.0</apiVersion>

<isExposed>true</isExposed>

<masterLabel>Local LLM Playground</masterLabel>

<targets>

<target>lightningCommunity__Page</target>

<target>lightningCommunity__Default</target>

</targets>

</LightningComponentBundle>

Now, the component can be deployed to the Salesforce org. Drag and drop the component onto any Experience Site page, and publish the site.

If you encounter the error message LWC1702: Invalid LWC imported identifier "createElement" (1:9) during deployment, delete the __tests__ directory inside the component bundle and try deploying again.

Adding a Basic HTML Structure

localLlmPlayground.html

<template>

<div class="slds-is-relative">

<div class="slds-grid slds-gutters">

<!-- CONFIGURATION -->

<div class="slds-col slds-large-size_4-of-12">

<div class="slds-card slds-p-horizontal_medium">

<div class="slds-text-title_caps slds-m-top_medium">

Configuration

</div>

<div class="slds-card__body">

<lightning-textarea data-name="system-prompt" label="System Prompt">

</lightning-textarea>

<lightning-input type="file" label="Model" accept=".pllm" class="slds-m-vertical_medium">

</lightning-input>

<lightning-input type="number" data-name="completionTokenLimit" label="Completion Token Limit"

value="128" class="slds-m-vertical_medium">

</lightning-input>

<lightning-slider data-name="temperature" label="Temperature" value=".5" max="1" min="0.1"

step="0.1">

</lightning-slider>

</div>

</div>

</div>

<!-- CHAT -->

<div class="slds-col slds-large-size_8-of-12">

<div class="slds-card slds-p-horizontal_medium">

<div class="slds-text-title_caps slds-m-top_medium">Chat</div>

<div class="slds-card__body">

<section role="log" class="slds-chat slds-box">

<ul class="slds-chat-list">

<!-- MESSAGES WILL APPEAR HERE -->

</ul>

</section>

<div class="slds-media slds-comment slds-hint-parent">

<div class="slds-media__figure">

<span class="slds-avatar slds-avatar_medium">

<lightning-icon icon-name="standard:live_chat"></lightning-icon>

</span>

</div>

<div class="slds-media__body">

<div class="slds-publisher slds-publisher_comment slds-is-active">

<textarea data-name="user-prompt"

class="slds-publisher__input slds-input_bare slds-text-longform">

</textarea>

<!-- CONTROLS -->

<div class="slds-publisher__actions slds-grid slds-grid_align-end">

<ul class="slds-grid"></ul>

<lightning-button class="slds-m-right_medium" label="Release Resources"

variant="destructive-text" icon-name="utility:delete">

</lightning-button>

<lightning-button class="slds-m-right_medium" variant="brand-outline"

label="Stop Generation" icon-name="utility:stop">

</lightning-button>

<lightning-button variant="brand" label="Generate" icon-name="utility:sparkles">

</lightning-button>

</div>

</div>

</div>

</div>

</div>

</div>

</div>

</div>

</div>

</template>

localLlmPlayground.css

.slds-chat {

height: 50vh;

overflow-y: auto;

}

The localLlmPlayground.css file should be manually created inside the component bundle to increase the chat element height and add scrolling.

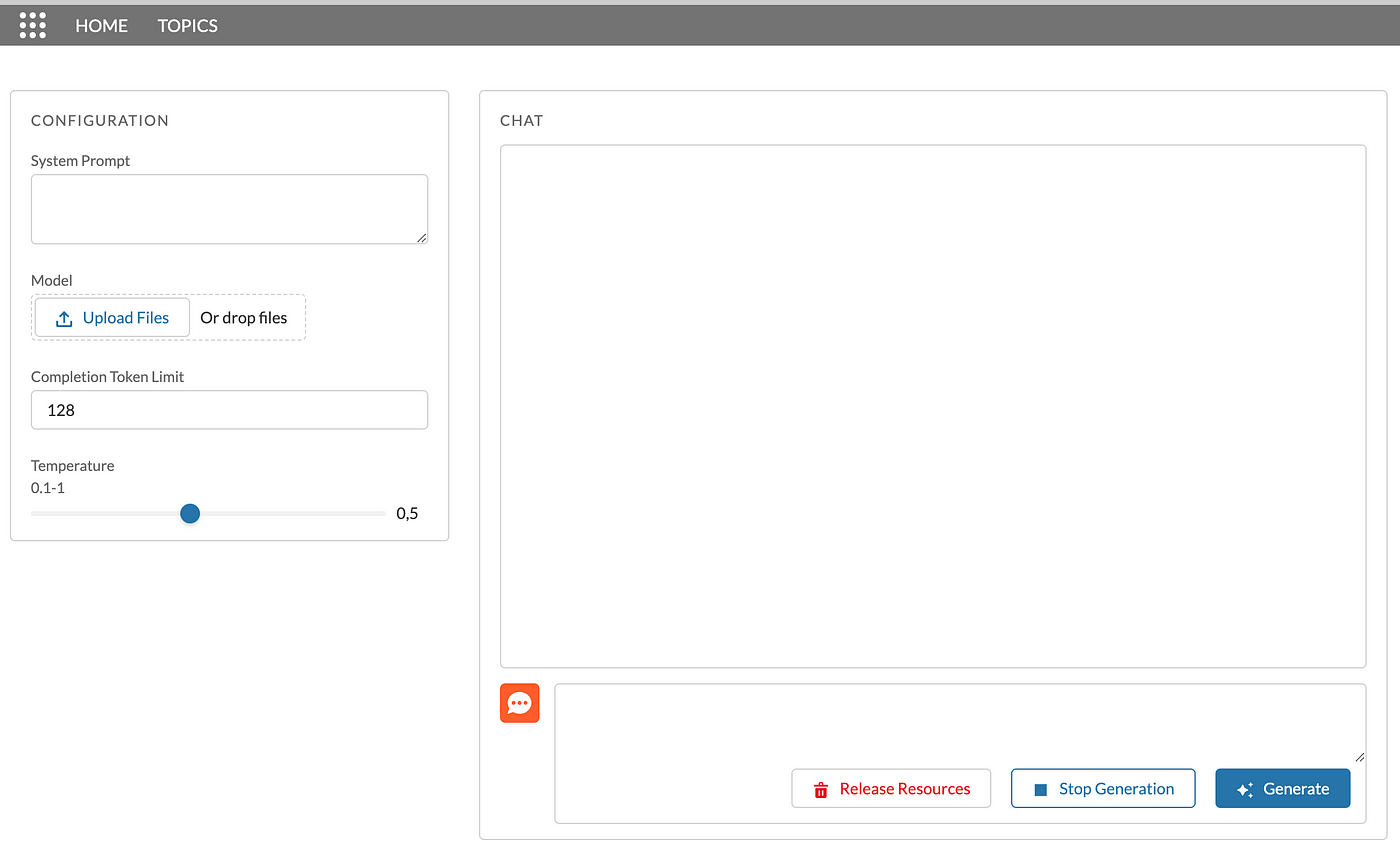

After deploying the bundle and refreshing the page, it should appear similar to the image below.

To view the result:

- Navigate to Setup > All Sites

- Click on the URL of the Experience Site you created

If you don’t see any changes, your page might be cached. To ensure you’re viewing the latest version when refreshing the Experience Site page:

- Perform a hard reload of the page, or

- Enable Debug Mode for your user

Loading Pico SDK from the Static Resource

localLlmPlayground.js

import { LightningElement } from "lwc";

import PicoSDK from "@salesforce/resourceUrl/PicoSDK";

import { loadScript } from "lightning/platformResourceLoader";

export default class LocalLlmPlayground extends LightningElement {

async connectedCallback() {

await loadScript(this, PicoSDK);

await window.Pico.loadSdk();

}

}

The line await loadScript(this, PicoSDK); loads the PicoSDK.js file from static resources, making the Pico instance available through the window object. The SDK itself is then fetched by window.Pico.loadSdk().

Adding chat.js File for Message Rendering

chat.js

export const MessageType = {

INBOUND: "inbound",

OUTBOUND: "outbound"

};

const createAvatarElement = (messageElement, avatarIcon) => {

const avatarWrapper = document.createElement("span");

avatarWrapper.setAttribute("aria-hidden", "true");

avatarWrapper.classList.add(

"slds-avatar",

"slds-avatar_circle",

"slds-chat-avatar"

);

const avatarInitials = document.createElement("span");

avatarInitials.classList.add(

"slds-avatar__initials",

"slds-avatar__initials_inverse"

);

avatarInitials.innerText = avatarIcon;

avatarWrapper.appendChild(avatarInitials);

messageElement.appendChild(avatarWrapper);

};

const createChatElements = (component, messageType) => {

const chatContainer = component.template.querySelector(".slds-chat");

const listItem = document.createElement("li");

listItem.classList.add(

"slds-chat-listitem",

`slds-chat-listitem_${messageType}`

);

const messageWrapper = document.createElement("div");

messageWrapper.classList.add("slds-chat-message");

createAvatarElement(

messageWrapper,

messageType === MessageType.INBOUND ? "🤖" : "🧑💻"

);

return { chatContainer, listItem, messageWrapper };

};

const createMessageBodyElements = (messageType) => {

const messageBody = document.createElement("div");

messageBody.classList.add("slds-chat-message__body");

const messageText = document.createElement("div");

messageText.classList.add(

"slds-chat-message__text",

`slds-chat-message__text_${messageType}`

);

return { messageBody, messageText };

};

const appendMessageText = (message, messageTextElement) => {

if (!message) return;

const textElement = document.createElement("span");

textElement.innerText = message;

messageTextElement.appendChild(textElement);

};

export const renderMessage = (component, messageType, message) => {

const { chatContainer, listItem, messageWrapper } = createChatElements(

component,

messageType

);

const { messageBody, messageText } = createMessageBodyElements(messageType);

appendMessageText(message, messageText);

messageBody.appendChild(messageText);

messageWrapper.appendChild(messageBody);

listItem.appendChild(messageWrapper);

const fragment = document.createDocumentFragment();

fragment.appendChild(listItem);

chatContainer.appendChild(fragment);

return messageText;

};

The chat.js file should be manually created inside the component bundle, and the code above should be pasted into it. It handles rendering inbound and outbound messages inside the .slds-chat element when a user writes a prompt and the LLM generates a response, and is based on the chat component from SLDS.
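The way chat.js derives its SLDS modifier classes from the message type can be seen in isolation with a small pure function. This is an illustrative sketch, not part of the component: chatClassNames is a hypothetical helper that only mirrors the class names built in createChatElements and createMessageBodyElements.

```javascript
const MessageType = {
  INBOUND: "inbound",
  OUTBOUND: "outbound"
};

// Hypothetical helper: returns the SLDS class names chat.js applies
// to the list item and the text element for a given message type.
function chatClassNames(messageType) {
  return {
    listItem: ["slds-chat-listitem", `slds-chat-listitem_${messageType}`],
    text: ["slds-chat-message__text", `slds-chat-message__text_${messageType}`]
  };
}

const inboundClasses = chatClassNames(MessageType.INBOUND);
const outboundClasses = chatClassNames(MessageType.OUTBOUND);

console.log(inboundClasses.listItem.join(" "));
console.log(outboundClasses.text.join(" "));
```

Since the message type is the only input, adding another kind of message would mostly be a matter of adding a new MessageType value with matching styles.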

After creating the file, the component bundle structure should look like this:

localLlmPlayground/

├─ chat.js

├─ localLlmPlayground.css

├─ localLlmPlayground.html

├─ localLlmPlayground.js

├─ localLlmPlayground.js-meta.xml

Implementing User Input Collection and LLM Response Generation

localLlmPlayground.html

<template>

<div class="slds-is-relative">

<!-- ERROR MESSAGE -->

<div lwc:if={errorMessage} class="slds-notify slds-notify_alert slds-alert_error" role="alert">

<span class="slds-assistive-text">error</span>

<h2>{errorMessage}</h2>

</div>

<div class="slds-grid slds-gutters">

<!-- CONFIGURATION -->

<div class="slds-col slds-large-size_4-of-12">

<div class="slds-card slds-p-horizontal_medium">

<div class="slds-text-title_caps slds-m-top_medium">

Configuration

</div>

<div class="slds-card__body">

<lightning-textarea data-name="system-prompt" label="System Prompt">

</lightning-textarea>

<lightning-input type="file" label="Model" onchange={loadModel} accept=".pllm"

class="slds-m-vertical_medium">

</lightning-input>

<lightning-badge lwc:if={modelName} label={modelName}></lightning-badge>

<lightning-input type="number" data-name="completionTokenLimit" label="Completion Token Limit"

value="128" class="slds-m-vertical_medium">

</lightning-input>

<lightning-slider data-name="temperature" label="Temperature" value=".5" max="1" min="0.1"

step="0.1">

</lightning-slider>

</div>

</div>

</div>

<!-- CHAT -->

<div class="slds-col slds-large-size_8-of-12">

<div class="slds-card slds-p-horizontal_medium">

<div class="slds-text-title_caps slds-m-top_medium">Chat</div>

<div class="slds-card__body">

<section role="log" class="slds-chat slds-box">

<ul class="slds-chat-list">

<!-- MESSAGES WILL APPEAR HERE -->

</ul>

</section>

<div class="slds-media slds-comment slds-hint-parent">

<div class="slds-media__figure">

<span class="slds-avatar slds-avatar_medium">

<lightning-icon icon-name="standard:live_chat"></lightning-icon>

</span>

</div>

<div class="slds-media__body">

<div class="slds-publisher slds-publisher_comment slds-is-active">

<textarea data-name="user-prompt"

class="slds-publisher__input slds-input_bare slds-text-longform">

</textarea>

<!-- CONTROLS -->

<div class="slds-publisher__actions slds-grid slds-grid_align-end">

<ul class="slds-grid"></ul>

<lightning-button class="slds-m-right_medium" label="Release Resources"

variant="destructive-text" icon-name="utility:delete"

onclick={releaseResources}>

</lightning-button>

<lightning-button class="slds-m-right_medium" variant="brand-outline"

label="Stop Generation" icon-name="utility:stop" onclick={stopGeneration}>

</lightning-button>

<lightning-button variant="brand" label="Generate" icon-name="utility:sparkles"

onclick={generate} disabled={isGenerating}>

</lightning-button>

</div>

</div>

</div>

</div>

</div>

</div>

</div>

</div>

</div>

</template>

localLlmPlayground.js

import { LightningElement } from "lwc";

import Pico from "@salesforce/resourceUrl/PicoSDK";

import { loadScript } from "lightning/platformResourceLoader";

import * as chat from "./chat.js";

export default class LocalLlmPlayground extends LightningElement {

isGenerating = false;

isStoppedGenerating = false;

errorMessage;

modelName;

dialog;

isWorkerCreated;

async connectedCallback() {

try {

await loadScript(this, Pico);

await window.Pico.loadSdk();

} catch (error) {

this.errorMessage = error.message;

}

}

async loadModel(event) {

try {

this.model = event.target.files[0];

this.modelName = this.model.name;

} catch (error) {

this.errorMessage = error.message;

}

}

async generate() {

this.errorMessage = "";

this.isGenerating = true;

try {

const { userInput, tokenLimit, temp } = this.collectInputValues();

chat.renderMessage(

this,

chat.MessageType.OUTBOUND,

userInput.value

);

if (!this.isWorkerCreated) {

await this.createPicoLlmWorker();

}

await this.dialog.addHumanRequest(userInput.value);

userInput.value = "";

await this.generateResponse(tokenLimit, temp);

} catch (error) {

this.errorMessage = error.message;

} finally {

this.isGenerating = false;

this.isStoppedGenerating = false;

}

}

collectInputValues() {

return {

userInput: this.template.querySelector("[data-name='user-prompt']"),

tokenLimit: this.template.querySelector(

"[data-name='completionTokenLimit']"

).value,

temp: this.template.querySelector("[data-name='temperature']").value

};

}

async createPicoLlmWorker() {

if (window.Pico.PicoLLMWorker) {

await window.Pico.PicoLLMWorker.release();

}

await window.Pico.loadWorker(this.model);

const systemPrompt = this.template.querySelector(

"[data-name='system-prompt']"

).value;

this.dialog = await window.Pico.PicoLLMWorker.getDialog(

undefined,

0,

systemPrompt

);

this.isWorkerCreated = true;

}

async generateResponse(tokenLimit, temp) {

const msgInbound = chat.renderMessage(this, chat.MessageType.INBOUND);

const { completion } = await window.Pico.PicoLLMWorker.generate(

this.dialog.prompt(),

{

tokenLimit,

temp,

streamCallback: (token) => {

this.streamLlmResponse(token, msgInbound);

}

}

);

await this.dialog.addLLMResponse(completion);

}

streamLlmResponse(token, msgInbound) {

if (!token || this.isStoppedGenerating) return;

msgInbound.innerText += token;

this.template.querySelector(".slds-chat").scrollBy(0, 32);

}

async releaseResources() {

try {

await window.Pico.PicoLLMWorker.release();

const databaseDeletionRequest = indexedDB.deleteDatabase("pv_db");

databaseDeletionRequest.onerror = (event) => {

this.errorMessage = event.target.error?.message ?? "Failed to delete the pv_db database.";

};

databaseDeletionRequest.onblocked = () => {

this.errorMessage = "Deletion of the pv_db database is blocked by an open connection.";

};

} catch (error) {

this.errorMessage = error.message;

}

}

stopGeneration() {

this.isStoppedGenerating = true;

this.isGenerating = false;

}

}

- The loadModel method is triggered when a model is uploaded via the lightning-input of type ‘file’. The model is then saved for later use.

- The generate method is the main method in the component, performing the majority of the work. It collects user input, renders chat messages, and streams the response generated by the LLM.

- The line await this.dialog.addHumanRequest(userInput.value); adds the user’s prompt to the dialog object. This allows the model to, theoretically, maintain awareness of the conversation context.

- The createPicoLlmWorker() method creates an instance of the PicoLLMWorker class, which accepts the system prompt provided by the user, loads the models, generates the responses, and performs all the heavy lifting.

- The generateResponse() method renders an incoming chat message and updates its inner text with each new token streamed from the worker, respecting the system prompt, token limit and temperature options. The token limit and temperature options can be updated before generating each new response. However, changing the system prompt requires reloading the worker.

- The releaseResources() method removes the model from indexedDB and releases all resources consumed by the worker.
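One detail of the streaming logic is easy to miss: the isStoppedGenerating flag checked in streamLlmResponse() only stops tokens from being rendered. The following sketch illustrates that behaviour with a stand-in for the worker. fakeGenerate and the state object are hypothetical and exist only for this example; the real worker runs asynchronously.

```javascript
// Hypothetical stand-in for the worker: feeds tokens to a callback one by one
// and returns the full completion, regardless of what the callback does.
function fakeGenerate(tokens, streamCallback) {
  for (const token of tokens) {
    streamCallback(token);
  }
  return { completion: tokens.join("") };
}

const state = { isStoppedGenerating: false, rendered: "" };

// Mirrors streamLlmResponse(): skip empty tokens and anything after a stop.
function onToken(token) {
  if (!token || state.isStoppedGenerating) return;
  state.rendered += token;
  if (state.rendered.endsWith("world")) {
    // Simulates the user pressing Stop Generation at this point.
    state.isStoppedGenerating = true;
  }
}

const { completion } = fakeGenerate(["Hello", ", ", "world", "!", " More"], onToken);

console.log(state.rendered); // text shown in the chat
console.log(completion); // text the worker actually produced
```

In this sketch the chat shows only the tokens received before the stop, while the completion still contains everything. In the component, that full completion is what gets passed to dialog.addLLMResponse().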

Now, the component should produce responses. To test it, follow these steps:

- Upload a model.

- Type a user prompt.

- Press the Generate button and wait for the response to be fully generated.

- When you have finished testing, press the Release Resources button.
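The Release Resources step relies on indexedDB.deleteDatabase(), which reports its outcome through events rather than a promise. A minimal sketch of wrapping it in a promise is shown here. The deleteDatabase helper and the fake factory are hypothetical; in the browser the factory argument would be the global indexedDB. Treating a blocked request as a failure is a design choice made for this sketch, not something the browser requires.

```javascript
// Hypothetical helper: wraps an IDBFactory-style deleteDatabase() call in a promise.
// `factory` would be `indexedDB` in the browser; it is a parameter here so the
// sketch can run with a fake.
function deleteDatabase(factory, name) {
  return new Promise((resolve, reject) => {
    const request = factory.deleteDatabase(name);
    request.onsuccess = () => resolve("deleted");
    request.onerror = (event) => reject(event.target.error);
    // Design choice of this sketch: report a blocked deletion as an error.
    request.onblocked = () =>
      reject(new Error(`Deletion of "${name}" is blocked by an open connection`));
  });
}

// Fake factory so the sketch runs outside a browser.
const fakeFactory = {
  deleteDatabase() {
    const request = {};
    // Fire the success event asynchronously, after the handlers are attached.
    Promise.resolve().then(() => request.onsuccess({ target: request }));
    return request;
  }
};

const deletion = deleteDatabase(fakeFactory, "pv_db");
deletion.then((result) => console.log(result));
```

Awaiting such a promise inside releaseResources() would let the existing try-catch block handle deletion errors in one place.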

Spinners!

Finally, let’s enhance the user experience by adding code to display a spinner while the model is loading and a worker is being created.

localLlmPlayground.html

...

<div class="slds-grid slds-gutters">

<!-- SPINNER -->

<lightning-spinner lwc:if={isLoading} alternative-text="Loading" size="large" variant="brand">

</lightning-spinner>

<!-- CONFIGURATION -->

<div class="slds-col slds-large-size_4-of-12">

...

Add the spinner markup shown above between the opening div.slds-grid tag and the configuration column.

localLlmPlayground.js

isLoading = true;

/* OTHER CODE */

async connectedCallback() {

try {

await loadScript(this, Pico);

await window.Pico.loadSdk();

this.isLoading = false;

} catch (error) {

this.errorMessage = error.message;

this.isLoading = false;

}

}

async loadModel(event) {

this.isLoading = true;

try {

this.model = event.target.files[0];

this.modelName = this.model.name;

} catch (error) {

this.errorMessage = error.message;

} finally {

this.isLoading = false;

}

}

async generate() {

this.errorMessage = "";

this.isGenerating = true;

this.isLoading = true;

try {

const { userInput, tokenLimit, temp } = this.collectInputValues();

chat.renderMessage(

this,

chat.MessageType.OUTBOUND,

userInput.value

);

if (!this.isWorkerCreated) {

await this.createPicoLlmWorker();

}

this.isLoading = false;

await this.dialog.addHumanRequest(userInput.value);

userInput.value = "";

await this.generateResponse(tokenLimit, temp);

} catch (error) {

this.errorMessage = error.message;

} finally {

this.isGenerating = false;

this.isStoppedGenerating = false;

this.isLoading = false;

}

}

/* OTHER CODE */

async releaseResources() {

this.isLoading = true;

try {

await window.Pico.PicoLLMWorker.release();

const databaseDeletionRequest = indexedDB.deleteDatabase("pv_db");

databaseDeletionRequest.onerror = (event) => {

this.errorMessage = event.target.error?.message ?? "Failed to delete the pv_db database.";

};

databaseDeletionRequest.onblocked = () => {

this.errorMessage = "Deletion of the pv_db database is blocked by an open connection.";

};

} catch (error) {

this.errorMessage = error.message;

} finally {

this.isLoading = false;

}

}

/* OTHER CODE */

Add try-catch-finally blocks and code to toggle the isLoading class field value.
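Since the same set-flag, try, catch, finally pattern repeats in several methods, it could be factored into a small helper. This is a sketch under assumptions: withLoading is a hypothetical function, and the plain object stands in for the component instance so the example runs outside LWC.

```javascript
// Hypothetical helper: runs an async task while the loading flag is set,
// stores any error message, and always clears the flag afterwards.
async function withLoading(component, task) {
  component.isLoading = true;
  try {
    return await task();
  } catch (error) {
    component.errorMessage = error.message;
    return undefined;
  } finally {
    component.isLoading = false;
  }
}

// Plain object standing in for the LWC component instance.
const component = { isLoading: false, errorMessage: "" };

const pendingResult = withLoading(component, async () => {
  throw new Error("model failed to load");
});

// The flag is set synchronously, before the task settles.
const loadingWhilePending = component.isLoading;

pendingResult.then(() => {
  console.log(component.isLoading, component.errorMessage);
});
```

Because the flag is cleared in the finally block, the spinner disappears whether the task succeeds or fails.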

Final Result and Source Code

Final Result

The result should look like the GIF below and be able to:

- Accept user input.

- Generate responses.

- Stop response generation.

- Release resources consumed by the worker.

Source Code

The source code is available on GitHub.
